#006 Open Weights (Mistral, LLaMA) vs Closed Models (ChatGPT, Copilot)

Mistral’s small models (e.g., Mistral 7B) are released under the Apache 2.0 license, which is far closer to true open source.

FORMAT of the article (3 parts)

PART 1 explains it ELI5-style (very simple language, no fluff)

PART 2 tells it as a thrilling story

PART 3 covers the nitty-gritty, with code, so you can show you really know the subject

Here it goes:

PART 1: ELI5 (very simple language, no fluff)

🍫 Imagine you love chocolate cake

Your friend Meta (LLaMA) or Mistral says:

“Here’s the recipe (the model weights). You can bake this cake at home, change the frosting, add sprinkles, or even open your own bakery.”

That recipe = open weights.

You have the exact instructions to recreate the cake.

🛒 Now imagine another friend, Google (Gemini) or OpenAI (ChatGPT)

They say:

“You can buy slices of my cake from my store. It’s tasty, but you never get to see the recipe. If you want more, you must pay me again and again.”

That = closed model.

You eat, but you never see the recipe.

⚖️ The Difference

Open weights = You own the recipe → you can run the model yourself, change it, trust it.

Closed models = You just buy cake slices → always tasty, but you depend on the baker.

PART 2: Thrilling story

🕵️‍♂️ The Story: “The Phantom Claim”

At AXA Insurance, fraud cases had been climbing. A shadowy network was fabricating hybrid EV accident claims, siphoning millions through fake repair shops.

🎭 The Characters

Amelia, a sharp insurance fraud analyst.

Mistral-7B, deployed on-prem, humming in the secure data center.

Closed AI API (Gemini/ChatGPT), a black-box helper.

⚡ The Problem

One morning, Amelia noticed a suspicious cluster of claims:

All from different customers.

But strangely, each accident involved a “rear left battery compartment fire.”

When she asked the closed API for help:

“Summarize patterns in these 500 accident claims.”

It replied with a polished but vague answer:

“These appear consistent with electrical faults in hybrid vehicles. Further investigation recommended.”

No proof. No reasoning. Just words. Amelia frowned. Too generic.

🧩 The Breakthrough with Mistral + XAI

Instead, Amelia turned to Mistral, running inside AXA’s private servers.

She fed it the claims dataset and wrapped it with SHAP (SHapley Additive exPlanations):

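import shap
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load Mistral 7B (Hugging Face model id; the weights must be reachable locally or via the Hub)
model_name = "mistralai/Mistral-7B-v0.1"
tokenizer = AutoTokenizer.from_pretrained(model_name)
tokenizer.pad_token = tokenizer.eos_token  # Mistral ships without a pad token
tokenizer.padding_side = "left"            # so position -1 is always a real token
model = AutoModelForCausalLM.from_pretrained(model_name)
model.eval()

# Toy stand-in for a fraud classifier sitting on top of Mistral
def fraud_predictor(texts):
    # simplified scoring for demo: squash one logit at the last position into a 0-1 score
    # (a real system would train a classification head; this number is not a fraud probability)
    batch = [str(t) for t in texts]  # SHAP passes a NumPy array of strings
    inputs = tokenizer(batch, return_tensors="pt", padding=True, truncation=True)
    with torch.no_grad():
        logits = model(**inputs).logits
    return torch.sigmoid(logits[:, -1, :1]).numpy()

# Explain predictions with SHAP (the tokenizer doubles as the text masker)
explainer = shap.Explainer(fraud_predictor, tokenizer)
shap_values = explainer(["rear left battery fire in hybrid claim",
                         "genuine front bumper accident"])
shap.plots.text(shap_values[0])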

🔍 What She Saw

SHAP highlighted “rear left battery” and “same repair shop” as red hot tokens contributing to high fraud probability.

Unlike the black box, Amelia could see WHY Mistral flagged them.
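To make those highlights less magical, here is a minimal sketch of what a Shapley value is: each token's average marginal contribution to the score across every possible ordering of the tokens. The `toy_fraud_score` function and its keyword weights are hypothetical stand-ins for the real model, not anything from AXA or Mistral; the SHAP library approximates this same quantity for models far too large to enumerate.

```python
from itertools import permutations

# Hypothetical keyword weights standing in for a real fraud model.
SUSPICIOUS = {"rear": 0.1, "left": 0.1, "battery": 0.3, "fire": 0.2}


def toy_fraud_score(tokens):
    """Toy score: sum of keyword weights, plus a bonus when 'battery' and 'fire' co-occur."""
    score = sum(SUSPICIOUS.get(t, 0.0) for t in tokens)
    if "battery" in tokens and "fire" in tokens:
        score += 0.2
    return score


def shapley_values(tokens, score_fn):
    """Exact Shapley value per token: mean marginal contribution over all orderings."""
    n = len(tokens)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        present = set()
        for idx in order:
            before = score_fn([tokens[i] for i in sorted(present)])
            present.add(idx)
            after = score_fn([tokens[i] for i in sorted(present)])
            totals[idx] += after - before
    return [total / len(orderings) for total in totals]


claim = ["rear", "left", "battery", "fire", "claim"]
values = shapley_values(claim, toy_fraud_score)
for token, value in zip(claim, values):
    print(f"{token:>8}: {value:+.3f}")
```

The per-token values add up to the score of the full claim, which is the property that makes Shapley-style explanations easy to audit. Enumerating every ordering only works for a handful of tokens, which is why practical tools sample instead.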

Then she ran LIME to double-check. Its local explanations kept pointing at the postcode and repair-vendor fields, and a quick tally showed that 90% of the suspicious claims came from three postcodes near the same repair vendor.
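The postcode concentration itself is plain aggregation once the suspicious claims are flagged. A minimal sketch, where the claim IDs, postcodes, and vendor names are entirely made up for illustration:

```python
from collections import Counter

# Hypothetical flagged claims: (claim_id, postcode, repair_vendor)
flagged_claims = [
    ("C001", "AB1", "Vendor X"),
    ("C002", "AB1", "Vendor X"),
    ("C003", "AB1", "Vendor X"),
    ("C004", "AB1", "Vendor X"),
    ("C005", "AB2", "Vendor X"),
    ("C006", "AB2", "Vendor X"),
    ("C007", "AB2", "Vendor X"),
    ("C008", "AB3", "Vendor X"),
    ("C009", "AB3", "Vendor X"),
    ("C010", "ZZ9", "Vendor Y"),
]


def postcode_concentration(claims, top_n=3):
    """Return the top_n postcodes with counts, and the share of claims they cover."""
    counts = Counter(postcode for _, postcode, _ in claims)
    top = counts.most_common(top_n)
    share = sum(count for _, count in top) / len(claims)
    return top, share


top_postcodes, share = postcode_concentration(flagged_claims)
print(top_postcodes)
print(f"Share of flagged claims in top postcodes: {share:.0%}")
```

A high share in a few postcodes is a lead for investigators, not proof of fraud on its own.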

🎬 The Climax

Amelia confronted the fraudsters. The repair shop was a front — one office, multiple fake customers, all linked to a single fraud ring.

AXA saved €12 million in payouts.

The board cheered. Amelia whispered to herself:

“The difference between open weights and closed APIs? Transparency. One shines light. The other casts shadows.”

⚖️ Moral of the Story

Closed APIs (Gemini/ChatGPT/Copilot): Quick answers, but opaque.

Mistral + XAI: Slower setup, but auditable, explainable, and easier to defend in front of a regulator.

In high-stakes insurance fraud, seeing the reasoning is as important as the answer.

PART 3: Full technical depth with code

🔓 1. LLaMA (Meta) and Mistral

Open Weights:

Meta (with LLaMA 2) and Mistral AI release their model weights publicly.

You can download, host, and fine-tune them on your own infrastructure, without relying on Meta or Mistral’s cloud.

Example: People run LLaMA/Mistral on local GPUs, clusters, or even laptops.
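Whether a model fits on a laptop comes down mostly to parameter count times bytes per parameter. A back-of-the-envelope sketch, assuming a round 7 billion parameters and counting the weights only (activations and the KV cache add more on top, so real usage is higher):

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

NUM_PARAMS = 7_000_000_000  # assumption: round figure for a "7B" model


def weight_memory_gb(num_params, precision):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(NUM_PARAMS, precision):.1f} GB")
```

At 16-bit precision the weights need roughly 14 GB, while 4-bit quantization (a common community technique, with some quality trade-off) brings that to about 3.5 GB, which is why 7B-class models show up on consumer hardware.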

Code:

They typically release inference code and reference implementations (e.g., Hugging Face integrations, tokenizers, architectures); full training code and training data are rarely included.

License Caveats:

LLaMA has a custom license (not OSI-approved). It restricts certain commercial uses: for LLaMA 2, for example, services with more than 700 million monthly active users need a separate license from Meta.

Mistral uses Apache 2.0 for its small models (e.g., 7B), which is far closer to true open source.

Reality Check:

Not all of them are “fully open source” in the strict sense: LLaMA’s custom license rules that out, while Mistral’s Apache 2.0 models come much closer.

But practically, you can download, modify, run, and study them → that’s why they’re considered open weights models.

🔒 2. Gemini (Google), ChatGPT (OpenAI), Copilot (Microsoft)

Closed Weights:

You cannot download the model.

The weights (parameters learned during training) are proprietary and hosted by the company.

Access:

You only interact with the models via an API or web interface.

No ability to run them offline or retrain them independently.

Source Code:

The core training and inference code is not released.

Only SDKs or wrappers are provided to access APIs.

Control & Transparency:

You can’t inspect how the model makes decisions.

Updates and performance are fully controlled by the vendor.

👉 Bottom line:

LLaMA/Mistral = “open weights”: not always fully “open source” in the OSI sense, but you can download, host, study, and adapt.

Gemini/ChatGPT/Copilot = “closed”: API-only, no weights, no offline hosting.

If you care about control, transparency, and sovereignty, open weights models are closer to “open source.”

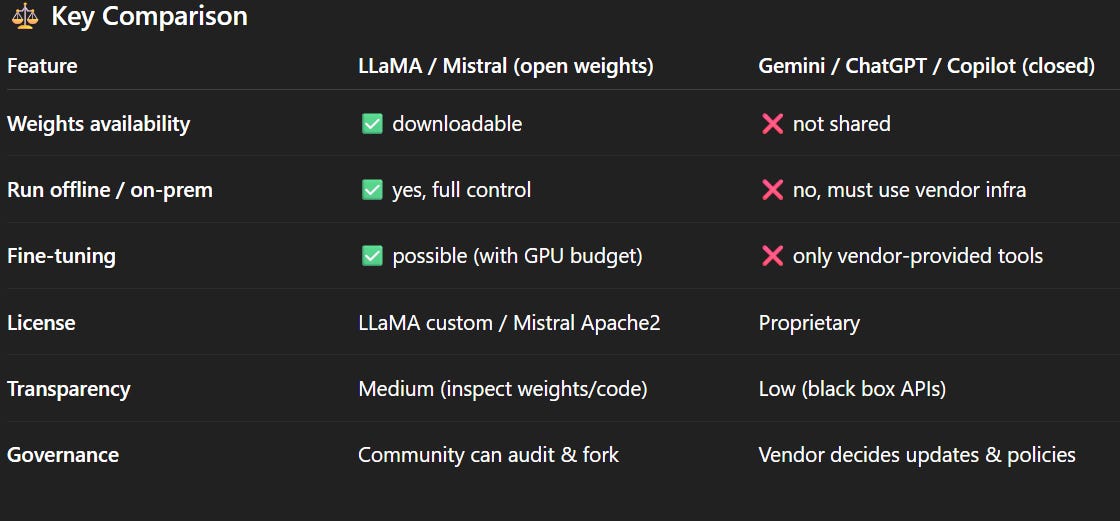

There are more subtle differences between open-weights models (LLaMA, Mistral) and closed models (Gemini, ChatGPT, Copilot) beyond the “weights open or not” point. Let’s unpack them clearly:

🔑 Other Key Differences

👉 Deployment Flexibility

Mistral / LLaMA: You can deploy anywhere — on-prem servers, air-gapped data centers, sovereign clouds, even laptops.

Gemini / ChatGPT / Copilot: Always depend on vendor infrastructure (Google Cloud, Azure, OpenAI servers). You cannot host the weights yourself.

👉 Data Privacy & Governance

Open Weights: You decide what data goes in; when you self-host, nothing leaves your perimeter. This is critical for regulated industries like insurance, banking, or healthcare.

Closed APIs: Data may transit outside your control, subject to vendor policies, audits, or jurisdictions.

👉 Customization

Open Weights: Full fine-tuning, domain adaptation, and retrieval-augmented generation (RAG) with proprietary datasets. You can hard-bake in your insurance fraud models, underwriting rules, or claims datasets.

Closed APIs: You’re limited to fine-tuning endpoints or embeddings APIs (if allowed), and at extra cost. Much less flexibility.

👉 Transparency & Explainability (XAI)

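Open Weights: Since weights and architecture are open, you can run explainable AI libraries against the model on your own hardware: perturbation-based tools (SHAP, LIME) with no per-call fees, and gradient-based tools (Captum) that need direct access to Mistral’s internals.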

Closed APIs: Black box. You can only explain outputs post-hoc (prompt vs. response), without visibility into why the model chose certain tokens.

👉 Cost & Economics

Open Weights: Higher upfront infra/training cost, but marginal inference is cheap (especially at scale).

Closed APIs: No infra burden, but you pay per call/token forever. For heavy use (millions of queries/day), costs explode.
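The break-even point is simple arithmetic. A sketch with made-up numbers: the per-token API price, tokens per query, and monthly infrastructure cost below are placeholders, not quotes from any vendor, and the self-hosted side ignores staff time and power.

```python
def monthly_api_cost(queries_per_day, tokens_per_query, price_per_1k_tokens, days=30):
    """Total API spend per month for a pay-per-token service."""
    return queries_per_day * tokens_per_query / 1000 * price_per_1k_tokens * days


def break_even_queries_per_day(monthly_infra_cost, tokens_per_query,
                               price_per_1k_tokens, days=30):
    """Daily query volume at which API spend equals a fixed monthly infra cost."""
    cost_per_query = tokens_per_query / 1000 * price_per_1k_tokens
    return monthly_infra_cost / (cost_per_query * days)


# Placeholder assumptions, chosen only to make the arithmetic easy to follow.
PRICE_PER_1K_TOKENS = 0.002   # currency units per 1,000 tokens
TOKENS_PER_QUERY = 1000       # prompt plus response
MONTHLY_INFRA_COST = 6000.0   # self-hosted GPUs, hosting, etc.

api_cost = monthly_api_cost(1_000_000, TOKENS_PER_QUERY, PRICE_PER_1K_TOKENS)
threshold = break_even_queries_per_day(MONTHLY_INFRA_COST, TOKENS_PER_QUERY,
                                       PRICE_PER_1K_TOKENS)
print(f"API cost at 1M queries/day: {api_cost:,.0f} per month")
print(f"Break-even volume: {threshold:,.0f} queries/day")
```

Under these placeholder figures, self-hosting wins above roughly 100,000 queries per day; plug in your own prices to see where your workload lands.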

👉 Ecosystem & Community

Open Weights: Rapid innovation from the open community (e.g., fine-tuned fraud detectors, domain-specific insurance models).

Closed APIs: Innovation controlled by the vendor’s roadmap.

👉 Risk & Support

Open Weights: You take on operational risk — scaling, model collapse, hallucinations. Support is community-based (unless you buy enterprise support from a vendor like Together, Anyscale, or Hugging Face).

Closed APIs: SLA-based support from Google/OpenAI/Microsoft, easier for enterprises wanting guarantees.

👉 Regulatory Acceptance

Open Weights: Easier to prove compliance (auditability, data residency).

Closed APIs: Sometimes disallowed in strict jurisdictions (EU financial regulators, national healthcare systems).

⚖️ Quick Analogy

Mistral / LLaMA = Owning a Car 🚗 → You can drive anywhere, customize it, but you handle maintenance.

Gemini / ChatGPT / Copilot = Using Uber 🚕 → No maintenance, always available, but you can’t change the car or control where it’s stored.
